17 March 2022

Whether in the form of image recognition, speech recognition or the recommendation algorithms on video portals, artificial intelligence (AI) is increasingly finding its way into our everyday lives. But if we are to let AI drive our cars in the future, it will need to work reliably at all times, and any form of manipulation must be ruled out. In a joint project with the automotive supplier ZF and the Federal Office for Information Security (BSI), Vasilios Danos and his team from TÜViT are investigating how, and according to which criteria, AI can be tested for use in self-driving cars.

What is the goal of the joint project with ZF and BSI?

Vasilios Danos: Software has been used in cars for quite some time and must meet a number of criteria. The problem is that these criteria can’t easily be applied to artificial intelligence, because AI systems are usually a black box: we can’t know for sure what they’ve learned or how they will behave in the future. So in this project, we aim to work out which criteria can be applied to these AI systems and how they can be checked in practice to ensure an appropriate level of security. In the first stage, we’re working this through with two specific automotive AI functions. In the next stage, we’ll go on to develop general rules that can also be applied to other functions in self-driving cars.

Which failure or attack scenarios are conceivable – and what would be their consequences?

One area of vulnerability is traffic sign recognition. Even if leaves or stickers get stuck to a stop sign, we humans can still clearly recognise it for what it is. AI, on the other hand, might mistake the sign for an advertising hoarding or, worse, interpret it as a right-of-way sign. And while the AI in the vehicle’s control systems is quite well secured against hacker attacks, attackers could exploit these weaknesses in how the surroundings are perceived to manipulate the systems: for example, by attaching small stickers to traffic signs according to a specific pattern. Such changes can be so subtle that we wouldn’t even notice them, yet they would change the entire picture for the AI, turning a 30 km/h sign into a 130 km/h sign, for instance. In this respect, IT security and safety are two sides of the same coin. If we can ensure that the systems are well defended against deliberate manipulation, they should also be robust against natural disruptions.
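The sticker attack described here is a physical form of what the research literature calls an adversarial example. As a purely illustrative sketch, and not the method used by TÜViT or ZF, the Python snippet below applies the fast gradient sign method (FGSM) to a hypothetical toy linear classifier with 1,000 “pixels”: each pixel is nudged by at most 0.01, yet because all the tiny changes push the score in the same direction, the decision flips. The weights, the image and the function names `linear_score` and `fgsm_step` are invented for this example; real traffic sign recognition relies on deep neural networks, where the gradient has to be computed by backpropagation rather than read off directly.

```python
import random

def linear_score(w, b, x):
    """Toy linear classifier: a score > 0 means 'stop sign', otherwise 'other'."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def fgsm_step(w, x, eps):
    """Fast gradient sign method (FGSM) for a linear model.

    For a linear score, the gradient with respect to x is just w, so shifting
    every pixel by eps against sign(w) lowers the score as much as possible
    while changing no single pixel by more than eps.
    (No clipping to [0, 1] is applied in this toy.)
    """
    def sign(v):
        return (v > 0) - (v < 0)
    return [xi - eps * sign(wi) for wi, xi in zip(w, x)]

random.seed(0)
n_pixels = 1000
w = [random.gauss(0.0, 1.0) for _ in range(n_pixels)]  # hypothetical model weights
x = [random.random() for _ in range(n_pixels)]          # hypothetical image, pixels in [0, 1)
b = 1.0 - linear_score(w, 0.0, x)                      # bias chosen so the clean score is 1.0 (up to rounding)

x_adv = fgsm_step(w, x, eps=0.01)                       # each pixel changes by at most 0.01
print("clean score:      ", round(linear_score(w, b, x), 3))
print("adversarial score:", round(linear_score(w, b, x_adv), 3))
print("largest pixel change:", max(abs(a - c) for a, c in zip(x_adv, x)))
```

In this linear toy, the score shifts by eps times the sum of the absolute weights, so many individually imperceptible changes add up to a large effect on the decision.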


How, for example, can the reliability of traffic sign recognition be verified?

Many of the testing methods for AI are currently still in the research phase, so identifying suitable methods is also one of the project’s priorities. An obvious approach would seem to be to test the AI with sample images of traffic signs. The problem is that an incredible number of variables would have to be taken into account: different lighting and weather conditions (rain, snow or fog), or the possibility that the sign is crooked or dirty. Covering all these cases with sample images simply isn’t possible. A more promising approach is to look for the AI’s “weak spots” and push it to its limits in a targeted way.

Difficult conditions: AI used in autonomous driving must also be able to reliably recognise traffic signs that are half covered in snow. © iStock

And how exactly do you put the AI through its paces?

We start by looking at the situations which cause the system the most problems. With AI for traffic sign recognition, for example, these would include certain lighting conditions. Detecting pedestrians, on the other hand, is always a problem when people wear colourful hats – for whatever reason. We use findings like these to keep drilling down to see when and at what point the AI gets it wrong.
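Drilling down to the point where the AI gets it wrong can be pictured as a search over the strength of a disturbance. The sketch below is a hypothetical illustration, not the project’s actual tooling: it assumes a toy two-pixel classifier (`predict`), models poor lighting as simple darkening (`darken`), and uses bisection (`find_breaking_point`) to locate the darkening strength at which the prediction flips. Bisection only works if the prediction changes once, and monotonically, over the tested range, which real models do not guarantee.

```python
def predict(x, w=(1.0, -1.0), b=-0.2):
    """Hypothetical two-pixel toy classifier standing in for a real model."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return "stop" if score > 0 else "other"

def darken(x, strength):
    """Assumed stand-in for poor lighting: scale every pixel towards black."""
    return [(1.0 - strength) * xi for xi in x]

def find_breaking_point(model, x, perturb, lo=0.0, hi=1.0, steps=40):
    """Bisection for the smallest perturbation strength that changes the prediction.

    Assumes the prediction flips once, monotonically, between lo and hi;
    returns None if it does not change anywhere up to hi.
    """
    original = model(x)
    if model(perturb(x, hi)) == original:
        return None  # no failure found within the tested range
    for _ in range(steps):
        mid = (lo + hi) / 2
        if model(perturb(x, mid)) == original:
            lo = mid  # still correct: the breaking point lies above mid
        else:
            hi = mid  # already wrong: the breaking point lies at or below mid
    return hi

image = [0.9, 0.1]  # hypothetical input classified as "stop"
threshold = find_breaking_point(predict, image, darken)
print(f"prediction flips at a darkening strength of about {threshold:.4f}")
```

In practice, such a search would be repeated over many images and many kinds of disturbance to map out where a model’s weak spots lie.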

What other methods are suitable for AI testing?

You could use an explainable or inherently secure AI system from the outset. At the moment, you mainly get the black-box systems I mentioned at the start. With these systems, the rules aren’t programmed into the AI but learned independently from training data. On the one hand, these systems are relatively cheap and easy to develop; on the other, their behaviour can’t be reliably predicted. There are, however, other AI architectures from which the rules the AI follows can be derived, at least in part. This makes it possible to determine in advance that the system will react safely under certain circumstances. These methods are still in their infancy, though, and can’t be used everywhere, at least for the time being. So, as part of the project, we also want to determine which functions in self-driving cars these AI architectures might be suitable for in the future.

What conclusions would manufacturers and regulators have to draw from the results of AI tests?

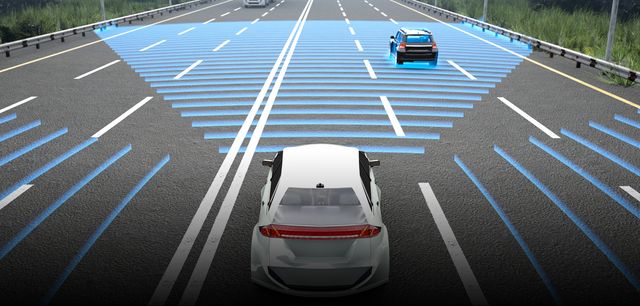

The test can be used to determine the probability that the AI will get things wrong. Based on these results, the relevant authorities will then have to decide whether this risk is tolerable. However, the test may also show that the system in question can’t be made more robust. In that case, manufacturers could compensate with appropriate backup functions, for example by assessing the traffic situation not only from camera images but also with additional sensors such as radar and LiDAR. Indeed, many vehicle systems are already designed this way. Ultimately, however, it will come down to certain criteria that the manufacturers must meet; how they meet them, and with which measures, will be up to them. This is no different outside the AI field, for example with DIN standards.
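One standard way to turn test results into a statement about failure probability is a one-sided Clopper-Pearson confidence bound on a binomial proportion. The sketch below assumes that every test case is an independent pass/fail trial, which is a simplification, and it is not necessarily how the project or any authority computes the figure; the function names are chosen for this example. With zero failures in n tests, the 95% upper bound comes out close to the well-known “rule of three”, 3/n.

```python
import math

def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p), with each term computed in log space."""
    if p <= 0.0:
        return 1.0
    if p >= 1.0:
        return 1.0 if k >= n else 0.0
    log_p, log_q = math.log(p), math.log1p(-p)
    log_n_fact = math.lgamma(n + 1)
    total = 0.0
    for i in range(k + 1):
        log_pmf = (log_n_fact - math.lgamma(i + 1) - math.lgamma(n - i + 1)
                   + i * log_p + (n - i) * log_q)
        total += math.exp(log_pmf)
    return total

def upper_bound_failure_rate(failures, trials, confidence=0.95):
    """One-sided Clopper-Pearson upper bound on the true failure probability.

    Finds, by bisection, the p at which observing at most `failures` errors
    in `trials` independent tests has probability 1 - confidence.
    """
    if failures >= trials:
        return 1.0
    alpha = 1.0 - confidence
    lo, hi = failures / trials, 1.0
    for _ in range(100):  # binom_cdf falls as p rises, so bisection converges
        mid = (lo + hi) / 2
        if binom_cdf(failures, trials, mid) > alpha:
            lo = mid
        else:
            hi = mid
    return hi

for failures, trials in [(0, 3000), (2, 1000), (2, 10000)]:
    ub = upper_bound_failure_rate(failures, trials)
    print(f"{failures} errors in {trials} tests: failure rate below {ub:.5f} (95% confidence)")
```

The bound shrinks only in proportion to the number of tests, so demonstrating a very low failure rate with statistical confidence requires a very large number of test cases.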

What is it about artificial intelligence that fascinates you so much?

I’m fascinated by the fact that we can already replicate the way the brain’s nerve cells work relatively well with learning methods that are, in mathematical terms, comparatively humdrum. Until now, many activities have been the sole preserve of humans, and every single person has to be painstakingly trained. With AI, the hope is that once it’s been trained, it can be copied as often as desired. This offers enormous potential for automation. And AI development is accelerating all the time: more progress is being made in AI each year than in the previous 20 years put together. AI is going to play a major role in more and more areas in the future. So we must agree today on where we want to draw the security boundaries: which systems we want to allow and which we don’t. Our project aims to help provide answers to these questions.

About Vasilios Danos:

© TÜV NORD

Vasilios Danos is a consultant for cyber and AI security at TÜViT. A graduate electrical engineer, he was already working on the possibilities of artificial intelligence and neural networks while at university. In 2006, he was a member of a university team that took part in the Robosoccer World Championships.